Mobile apps are an ideal use case for speech recognition, whether for hands-free dictation, voice interfaces in mobile games, or subtitles for video and audio messages.

Apple's iPhone is powered by iOS, Apple's popular flagship mobile operating system, and the iPad runs the closely related iPadOS. iOS features its own Speech Recognition API, but it can be clumsy and verbose to integrate. Crucially, not all of the languages it supports have on-device recognition, and even for those that do, the system may still stream audio to Apple's servers, introducing privacy concerns and latency.

Fortunately, Picovoice's Speech-to-Text technology does not have these downsides, and integrates seamlessly into the iOS ecosystem.

In addition to iOS, Picovoice's Speech-to-Text engines are compatible with a wide array of environments, such as Android, Linux, macOS, Windows, and modern web browsers (via WebAssembly).

With Speech-to-Text transcription, there are two main approaches: Real-Time and Batch.

Real-Time Speech-to-Text

Real-time Speech-to-Text systems produce text while the user is still speaking, much as people convert speech into words in their heads during a conversation. The downside is that acoustically or semantically ambiguous words must be transcribed before the rest of the sentence is available, so some errors only become apparent once the sentence is finished. Take this drawback into account when deciding whether an application needs real-time transcription.

Real-Time Speech-to-Text, Online Automatic Speech Recognition, and Streaming Speech-to-Text all refer to the same core technology.

For iOS devices, Picovoice provides Cheetah Streaming Speech-to-Text, which performs all voice recognition in real time directly on the device. This approach avoids network-related delays and minimizes the latency between the user's speech input and the transcription output.
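The streaming pattern described above can be sketched as a loop that feeds audio one frame at a time, appends each partial transcript, and closes an utterance when the engine reports an endpoint. This is a minimal sketch, not Picovoice's implementation: `StreamingEngine`, `FakeEngine`, and `transcribe_stream` are hypothetical names, and the `process`/`flush` shape is an assumption modeled on the `(partial_transcript, is_endpoint)` pair returned in the SDK snippets.

```python
from typing import Iterable, List, Protocol, Sequence, Tuple


class StreamingEngine(Protocol):
    """Hypothetical interface; assumed to mirror a streaming engine's calls."""

    def process(self, frame: Sequence[int]) -> Tuple[str, bool]: ...

    def flush(self) -> str: ...


def transcribe_stream(
    engine: StreamingEngine, frames: Iterable[Sequence[int]]
) -> List[str]:
    """Feed frames one by one and return one string per detected utterance."""
    utterances: List[str] = []
    current = ""
    for frame in frames:
        partial, is_endpoint = engine.process(frame)
        current += partial  # partial text arrives while audio is still streaming
        if is_endpoint:
            current += engine.flush()  # collect any text the engine still buffers
            if current:
                utterances.append(current)
            current = ""
    # The audio ended without a final endpoint: flush whatever is left.
    current += engine.flush()
    if current:
        utterances.append(current)
    return utterances


class FakeEngine:
    """Stand-in engine that replays scripted (partial, is_endpoint) results."""

    def __init__(self, script: Sequence[Tuple[str, bool]]) -> None:
        self._script = list(script)
        self._index = 0

    def process(self, frame: Sequence[int]) -> Tuple[str, bool]:
        result = self._script[self._index]
        self._index += 1
        return result

    def flush(self) -> str:
        return ""
```

In an app, the partial text would typically be shown to the user as it arrives, while the completed utterances are what gets stored or acted upon.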

Below is the list of software development kits (SDKs) supported by Cheetah, along with corresponding code snippets and quick-start guides.

1o = pvcheetah.create(access_key)2

3partial_transcript, is_endpoint =4 o.process(get_next_audio_frame())1const o = new Cheetah(accessKey)2

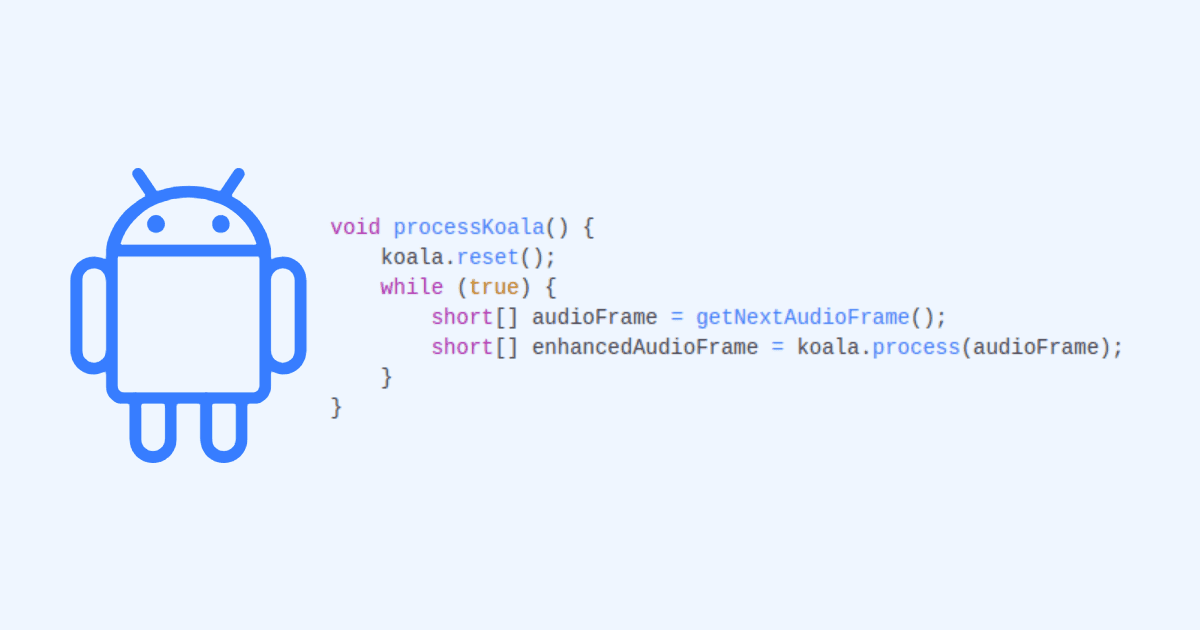

3const [partialTranscript, isEndpoint] =4 o.process(audioFrame);1Cheetah o = new Cheetah.Builder()2 .setAccessKey(accessKey)3 .setModelPath(modelPath)4 .build(appContext);5

6CheetahTranscript partialResult =7 o.process(getNextAudioFrame());1let cheetah = Cheetah(2 accessKey: accessKey,3 modelPath: modelPath)4

5let partialTranscript, isEndpoint =6 try cheetah.process(7 getNextAudioFrame())1Cheetah o = new Cheetah.Builder()2 .setAccessKey(accessKey)3 .build();4

5CheetahTranscript r =6 o.process(getNextAudioFrame());1Cheetah o =2 Cheetah.Create(accessKey);3

4CheetahTranscript partialResult =5 o.Process(GetNextAudioFrame());1const {2 result,3 isLoaded,4 isListening,5 error,6 init,7 start,8 stop,9 release,10} = useCheetah();11

12await init(13 accessKey,14 model15);16

17await start();18await stop();19

20useEffect(() => {21 if (result !== null) {22 // Handle transcript23 }24}, [result])1_cheetah = await Cheetah.create(2 accessKey,3 modelPath);4

5CheetahTranscript partialResult =6 await _cheetah.process(7 getAudioFrame());1const cheetah = await Cheetah.create(2 accessKey,3 modelPath)4

5const partialResult =6 await cheetah.process(7 getAudioFrame())1pv_cheetah_t *cheetah = NULL;2pv_cheetah_init(3 access_key,4 model_file_path,5 endpoint_duration_sec,6 enable_automatic_punctuation,7 &cheetah);8

9const int16_t *pcm = get_next_audio_frame();10

11char *partial_transcript = NULL;12bool is_endpoint = false;13const pv_status_t status = pv_cheetah_process(14 cheetah,15 pcm,16 &partial_transcript,17 &is_endpoint);1const cheetah =2 await CheetahWorker.create(3 accessKey,4 (cheetahTranscript) => {5 // callback6 },7 {8 base64: cheetahParams,9 // or10 publicPath: modelPath,11 }12 );13

14WebVoiceProcessor.subscribe(cheetah);Batch Speech-to-Text

Unlike real-time transcription, Batch Speech-to-Text waits until the spoken phrase or recording is complete before producing a transcription. Compared to real-time approaches, this method offers higher accuracy and runtime efficiency. Because the whole utterance is available, the engine can use the words that follow as well as those that precede to resolve linguistic and acoustic ambiguities. It also avoids interleaving listening and transcribing, which improves overall efficiency.

For iOS-based devices, Picovoice offers Leopard Speech-to-Text, a state-of-the-art technology for batch transcription tasks. Like Cheetah, Leopard processes all voice audio data on device, ensuring privacy by design and helping applications comply with regulations such as HIPAA and GDPR. To further improve accuracy, users can add custom vocabulary and boost specific phrases via the Picovoice Console.

Below is the list of SDKs supported by Leopard, along with corresponding code snippets and quick-start guides.

Python:

```python
o = pvleopard.create(access_key)

transcript, words = \
    o.process_file(path)
```

Node.js:

```javascript
const o = new Leopard(accessKey)

const { transcript, words } =
  o.processFile(path)
```

Android:

```java
Leopard o = new Leopard.Builder()
    .setAccessKey(accessKey)
    .setModelPath(modelPath)
    .build(appContext);

LeopardTranscript r =
    o.processFile(path);
```

iOS:

```swift
let o = try Leopard(
    accessKey: accessKey,
    modelPath: modelPath)

let r = try o.processFile(path)
```

Java:

```java
Leopard o = new Leopard.Builder()
    .setAccessKey(accessKey)
    .build();

LeopardTranscript r =
    o.processFile(path);
```

.NET:

```csharp
Leopard o =
    Leopard.Create(accessKey);

LeopardTranscript result =
    o.ProcessFile(path);
```

React:

```javascript
const {
  result,
  isLoaded,
  error,
  init,
  processFile,
  startRecording,
  stopRecording,
  isRecording,
  recordingElapsedSec,
  release,
} = useLeopard();

await init(
  accessKey,
  model
);

await processFile(audioFile);

useEffect(() => {
  if (result !== null) {
    // Handle transcript
  }
}, [result])
```

Flutter:

```dart
Leopard o = await Leopard.create(
    accessKey,
    modelPath);

LeopardTranscript result =
    await o.processFile(path);
```

React Native:

```javascript
const o = await Leopard.create(
  accessKey,
  modelPath)

const {transcript, words} =
  await o.processFile(path)
```

C:

```c
pv_leopard_t *leopard = NULL;
pv_leopard_init(
    access_key,
    model_path,
    enable_automatic_punctuation,
    &leopard);

char *transcript = NULL;
int32_t num_words = 0;
pv_word_t *words = NULL;
pv_leopard_process_file(
    leopard,
    path,
    &transcript,
    &num_words,
    &words);
```

Web:

```javascript
const leopard =
  await LeopardWorker.
    fromPublicDirectory(
      accessKey,
      modelPath
    );

const {
  transcript,
  words
} =
  await leopard.process(pcm);
```