TLDR: Orca Streaming Text-to-Speech:

- Adds streaming text processing and becomes a PADRI Text-to-Speech engine optimized for LLMs, able to continuously process text as it is generated.

- Continues to support async processing: it converts a complete text input to audio using a request-response model.

- Is private-by-design and processes data without sending it to third-party remote servers.

- Runs on any platform: embedded, mobile, web, desktop, on-prem, and private or public cloud, including serverless.
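A toy model shows why streaming matters for LLM output. Suppose an LLM emits its reply at a steady rate, and ignore synthesis and network time. With request-response synthesis, the TTS request can only be sent once the whole reply exists. With streaming, synthesis can begin as soon as the first chunk of text is available. The function name and all numbers below are hypothetical and chosen only for illustration. Real engines may buffer more or less text before producing audio.

```python
from dataclasses import dataclass


@dataclass
class LatencyEstimate:
    request_response_s: float  # wait before synthesis can start when the full text is sent at once
    streaming_s: float         # wait before synthesis can start when text is streamed in chunks


def time_until_synthesis_starts(
    total_tokens: int,
    tokens_per_second: float,
    first_chunk_tokens: int,
) -> LatencyEstimate:
    """Toy model (hypothetical): time spent waiting for LLM text before TTS can start.

    Synthesis compute time and network time are deliberately ignored.
    """
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    if not 0 < first_chunk_tokens <= total_tokens:
        raise ValueError("first_chunk_tokens must be in (0, total_tokens]")
    # Request-response: the whole LLM reply must exist before the TTS request is made.
    request_response = total_tokens / tokens_per_second
    # Streaming: synthesis can begin once the first chunk of text has been generated.
    streaming = first_chunk_tokens / tokens_per_second
    return LatencyEstimate(request_response, streaming)


if __name__ == "__main__":
    # Hypothetical numbers: a 200-token reply generated at 50 tokens/s,
    # with the streaming engine starting after the first 5 tokens.
    est = time_until_synthesis_starts(200, 50.0, 5)
    print(f"request-response waits {est.request_response_s:.1f} s")  # 4.0 s
    print(f"streaming waits {est.streaming_s:.1f} s")                # 0.1 s
```

Under these assumed numbers, the request-response pipeline is silent for the entire generation time. The streaming pipeline's initial wait depends only on the size of the first chunk, not on the length of the reply.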

Text-to-Speech is one of the best-known and most widely used voice AI technologies, enabling use cases across industries:

- Voicebots

- Interactive Voice Response Systems (IVRs)

- Industrial Voice Assistants

- Information and Announcement Systems

- Real-time Translation

- Accessibility

- Publishing

Text-to-Speech users have two distinct expectations:

- Quality

- Response time

1. High-quality Text-to-Speech

The quality of a Text-to-Speech engine refers to how closely the produced speech resembles a “real” human voice. The media, entertainment, and publishing industries leverage high-quality Text-to-Speech to narrate books, movies, games, broadcasts, and podcasts. Text-to-Speech can reduce voice-over costs while offering more flexibility in speed, pitch, intonation, and so on.

2. Fast and Responsive Text-to-Speech

Response time is crucial for voicebots, specifically those that aim to increase productivity. A timely response while picking up items in a warehouse or ordering food at a drive-thru is critical for the continuity of human-like interactions.

Challenges in Text-to-Speech

High-quality Text-to-Speech is generally used in pre- or post-production. When there is a trade-off between quality and response time, quality is prioritized. Since the volume for these use cases is typically low, the overall cost can be negligible.

On the other hand, a balance between speed and quality of Text-to-Speech is needed for use cases such as voicebots and virtual customer service agents. These high-volume use cases also require enterprises to be mindful of costs.

Recent advances in AI, such as large transformer models, have made high-quality machine-generated voices and voice cloning more accessible. However, these large models require significant compute resources, which creates a dependency on the cloud. Despite the efforts of Text-to-Speech API providers, the inherent limitations of cloud computing constrain how far they can improve response time and reduce costs.

Why another Text-to-Speech engine?

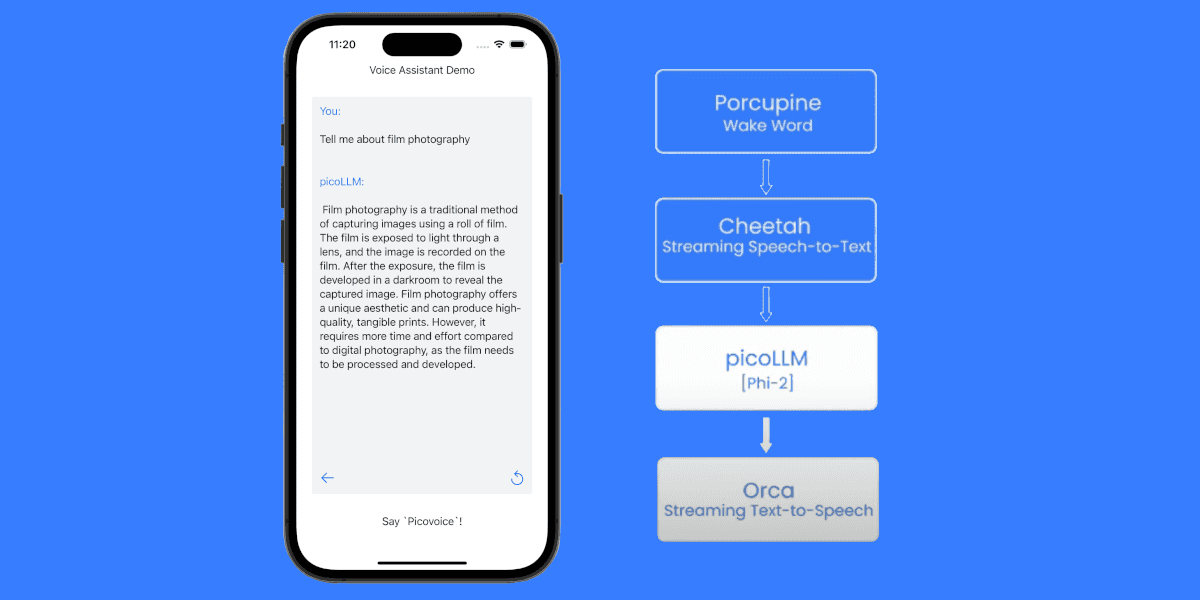

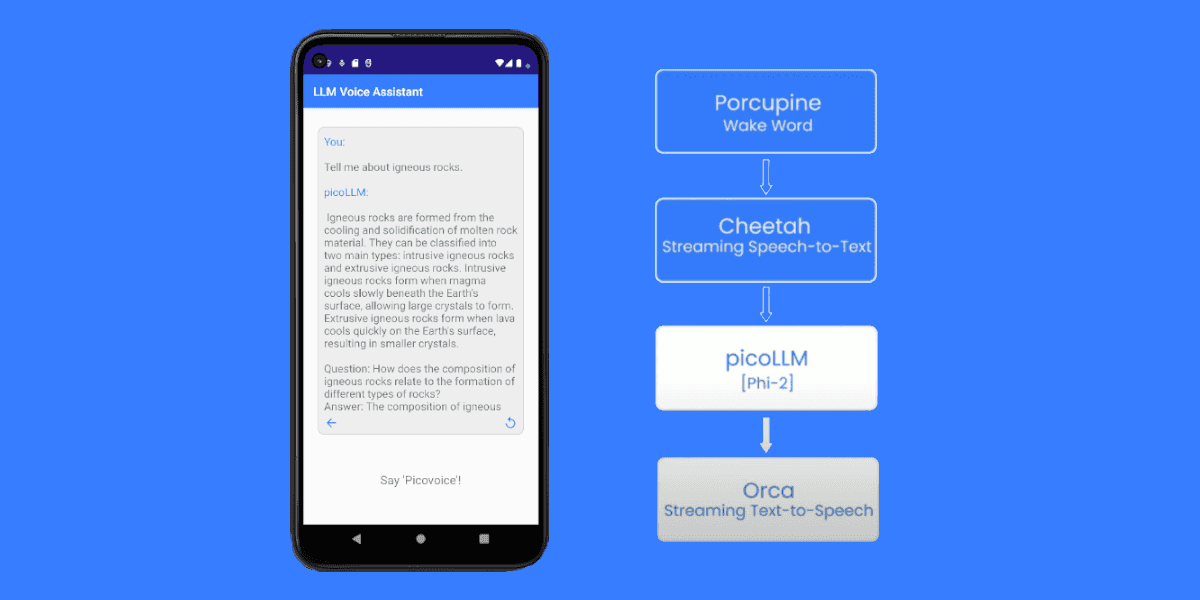

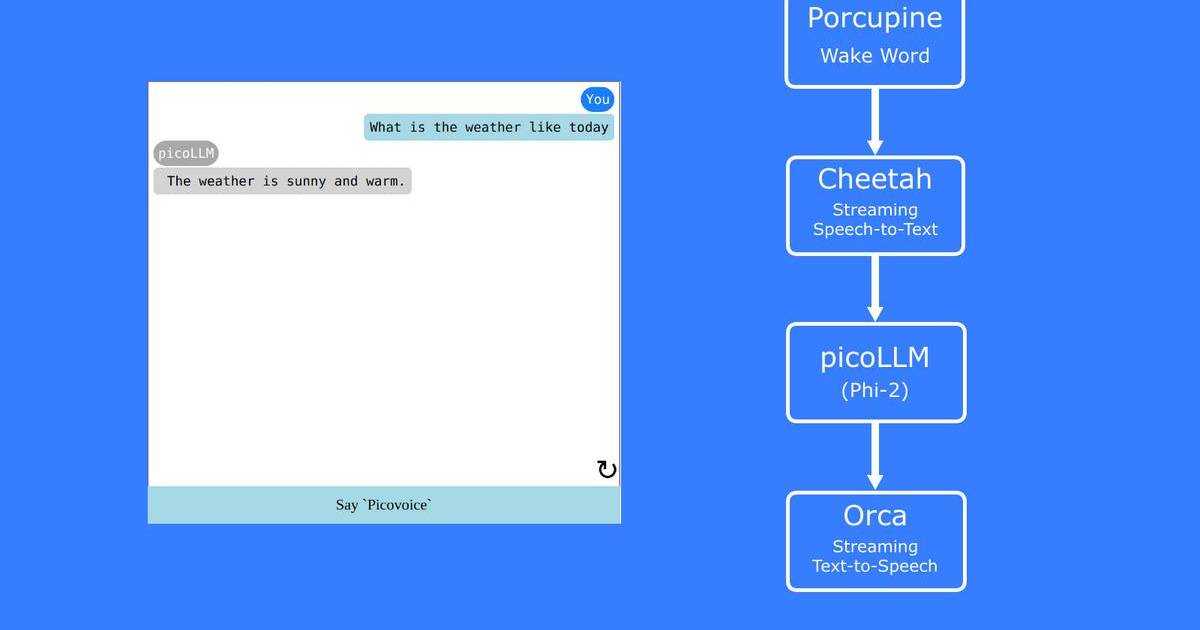

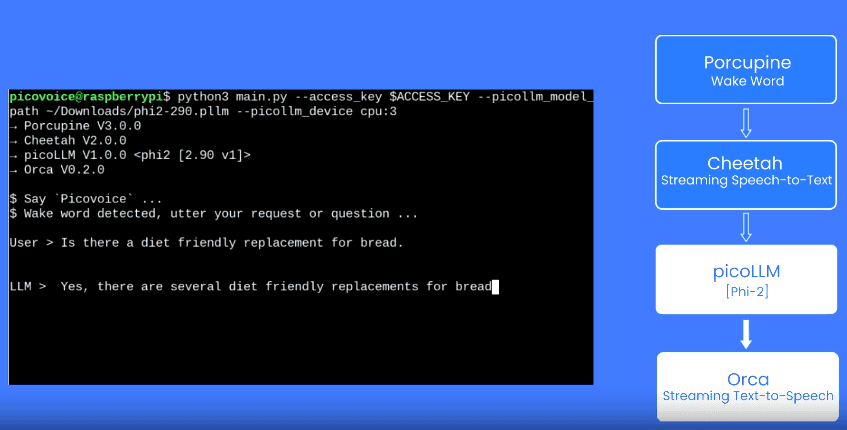

Orca is not just another Text-to-Speech engine. Unlike cloud Text-to-Speech APIs, on-device Text-to-Speech eliminates network latency and offers guaranteed response time. But that is not enough: in the LLM era, Text-to-Speech engines should be PADRI. Running decentralized models minimizes infrastructure costs, and converting text to speech locally, without sending anything to remote servers, is the only way to ensure privacy. Yet training an on-device Text-to-Speech engine that enables human-like interactions, just like cloud APIs, while remaining efficient enough to run across platforms is challenging. That’s why we built Orca, leveraging Picovoice’s expertise in training efficient on-device voice AI models without sacrificing performance.

Meet Orca Streaming Text-to-Speech

Orca Streaming Text-to-Speech is a lightweight Text-to-Speech engine that converts text to speech locally, offering fast and private experiences without sacrificing human-like quality.

Orca Streaming Text-to-Speech is:

- able to process both streaming and pre-defined text

- capable of generating both audio output streams and files

- compact and computationally efficient, running across platforms

- private and fast, powered by on-device processing

- easy to use with a few lines of code
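The list above says Orca can produce both audio streams and files. When audio arrives from a stream in chunks, the chunks can be appended to a WAV file with Python's standard `wave` module. Below is a minimal sketch. It assumes the engine returns single-channel, 16-bit PCM samples as sequences of integers, and that you pass in the engine's sample rate (the Python SDK is assumed to expose it as `orca.sample_rate`). `write_pcm_chunks_to_wav` is a hypothetical helper, not part of the Orca API.

```python
import array
import sys
import wave
from typing import Iterable, Sequence


def write_pcm_chunks_to_wav(
    path: str,
    chunks: Iterable[Sequence[int]],
    sample_rate: int,
) -> int:
    """Hypothetical helper: write single-channel 16-bit PCM chunks to a WAV file.

    Assumes each chunk holds mono 16-bit samples, as the TTS engine is assumed
    to return. Returns the total number of samples written.
    """
    total = 0
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)  # mono
        wav.setsampwidth(2)  # 2 bytes = 16-bit samples
        wav.setframerate(sample_rate)
        for chunk in chunks:
            # "h" = signed 16-bit; out-of-range values raise OverflowError.
            samples = array.array("h", chunk)
            if sys.byteorder == "big":
                samples.byteswap()  # WAV sample data is little-endian
            wav.writeframes(samples.tobytes())
            total += len(samples)
    return total
```

For example, after collecting the non-empty chunks returned by a stream into `pcm_chunks`, a call like `write_pcm_chunks_to_wav("speech.wav", pcm_chunks, orca.sample_rate)` would save them. Because the helper accepts any iterable, it can also consume a generator that yields chunks as they are synthesized.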

Start Building!

Developers can start building with Orca Streaming Text-to-Speech for free by signing up for the Picovoice Console.

Python

```python
orca = pvorca.create(access_key)

stream = orca.stream_open()

speech = stream.synthesize(
    get_next_text_chunk())
```

Node.js

```javascript
const o = new Orca(accessKey);

const s = o.streamOpen();

const pcm = s.synthesize(
    getNextTextChunk());
```

Android (Java)

```java
Orca o = new Orca.Builder()
    .setAccessKey(accessKey)
    .setModelPath(modelPath)
    .build(appContext);

OrcaStream stream =
    o.streamOpen(
        new OrcaSynthesizeParams
            .Builder()
            .build());

short[] speech =
    stream.synthesize(
        getNextTextChunk());
```

iOS (Swift)

```swift
let orca = Orca(
    accessKey: accessKey,
    modelPath: modelPath)

let stream = orca.streamOpen()

let speech =
    stream.synthesize(
        getNextTextChunk())
```

Web (JavaScript)

```javascript
const o =
    await OrcaWorker.create(
        accessKey,
        modelPath);

const s = await o.streamOpen();

const speech =
    await s.synthesize(
        getNextTextChunk());
```

.NET (C#)

```csharp
Orca o =
    Orca.Create(accessKey);

Orca.OrcaStream stream =
    o.StreamOpen();

short[] speech =
    stream.Synthesize(textChunk);
```
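The Python snippet above shows a single `synthesize` call. In an LLM pipeline, that call sits inside a loop, and any text still buffered must be flushed when the input ends. Below is a minimal sketch of such a loop. It assumes, based on the Orca Python SDK documentation, that the stream's `synthesize` returns `None` while it is still buffering text, and that the stream also exposes `flush()` and `close()`. `PcmStream`, `speak_stream`, and the `on_pcm` callback are hypothetical names introduced here. Check the method names against the current SDK reference before relying on them.

```python
from typing import Callable, Iterable, Optional, Protocol, Sequence


class PcmStream(Protocol):
    """Assumed shape of a streaming TTS object, modeled on the Orca Python stream."""

    def synthesize(self, text: str) -> Optional[Sequence[int]]: ...

    def flush(self) -> Optional[Sequence[int]]: ...

    def close(self) -> None: ...


def speak_stream(
    stream: PcmStream,
    text_chunks: Iterable[str],
    on_pcm: Callable[[Sequence[int]], None],
) -> None:
    """Feed text chunks as they arrive and pass each audio chunk to on_pcm."""
    try:
        for chunk in text_chunks:
            pcm = stream.synthesize(chunk)
            # Assumed behavior: None means the engine is still buffering text.
            if pcm is not None:
                on_pcm(pcm)
        # Input has ended: synthesize whatever text is still buffered.
        pcm = stream.flush()
        if pcm is not None:
            on_pcm(pcm)
    finally:
        # Release the stream even if the text source or the callback raises.
        stream.close()
```

With the Python SDK, usage might look like `speak_stream(orca.stream_open(), llm_text_chunks(), play_pcm)`. Here `llm_text_chunks` and `play_pcm` are hypothetical placeholders for your LLM token iterator and audio sink. Typing the parameter as a `Protocol` keeps the loop independent of the SDK, so it can be exercised with a fake stream in tests.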