TLDR: Eagle offers private, efficient, fast, accurate, and ready-to-use Speaker Recognition & Identification. The new Eagle is optimized for real-time conversations and captures speaker changes immediately. Open-source benchmarks show it is much faster, more efficient, and more accurate than the alternatives.

Speaker Recognition analyzes distinctive voice characteristics to identify and verify speakers. It is the technology behind voice authentication, speaker-based personalization, and speaker spotting, enabling use cases across industries:

- IVR personalization to tailor messages, menus, and treatments

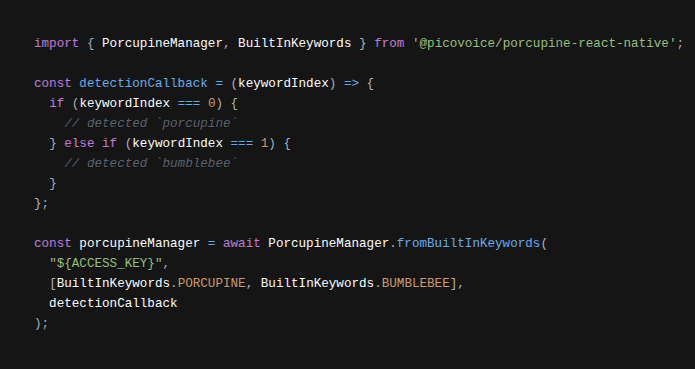

- Custom settings with wake word enrollment, e.g., Alexa Voice ID

- Wake word false acceptance and false rejection minimization, e.g., Siri Recognize Only My Voice

- Caller authentication in telephone banking, e.g., Barclays voice authentication

- Speaker identification in virtual and hybrid meetings

Challenges

Speaker Recognition is a challenging technology to build, given the complex nature of the human voice. Anatomical and behavioral differences, such as the shapes of our mouths and throats, pitch, tone, and speaking patterns, affect our voice characteristics. Acoustic conditions, such as background noise, echo, or distance from the microphone, and the phonetic variability of languages and phrases add another layer of complexity.

Why another Speaker Recognition engine?

Picovoice initially built Eagle Speaker Recognition for internal use. Later, we decided to share it with the public because we couldn't find a production-ready, accurate, and cross-platform Speaker Recognition engine. Both legacy vendors and Big Tech require developers to go through a long sales process before giving access to their SDKs. For example, Microsoft Azure AI Speaker Recognition grants limited access in selected languages to "approved" customers only. Microsoft later announced that Azure AI Speaker Recognition is retiring on September 30, 2025.

Meet Eagle Speaker Recognition

Eagle Speaker Recognition is:

- compact and computationally efficient, running across platforms

- private and reliable, powered by on-device voice processing

- language-agnostic and text-independent

- highly accurate, proven by open-source benchmarks

- easy to use with a simple enrollment process

Start Building

Developers can start building with Eagle Speaker Recognition by signing up for the Picovoice Console for free.

Python

    import pveagle

    # Speaker Enrollment
    profiler = pveagle.create_profiler(access_key)
    percentage = 0.0
    while percentage < 100.0:
        percentage, feedback = profiler.enroll(
            get_next_enroll_audio_data())
    speaker_profile = profiler.export()

    # Speaker Recognition
    eagle = pveagle.create_recognizer(
        access_key,
        speaker_profile)
    while True:
        scores = eagle.process(
            get_next_audio_frame())

TypeScript

    // Speaker Enrollment
    const profiler = new EagleProfiler(accessKey);
    let percentage = 0;
    while (percentage < 100) {
      const result: EnrollProgress =
        profiler.enroll(enrollAudioData);
      percentage = result.percentage;
    }
    const speakerProfile = profiler.export();

    // Speaker Recognition
    const eagle = new Eagle(
      accessKey,
      speakerProfile);

    while (true) {
      const scores: number[] =
        eagle.process(audioData);
    }

Java

    // Speaker Enrollment
    EagleProfiler profiler = new EagleProfiler.Builder()
        .setAccessKey(accessKey)
        .build();

    EagleProfilerEnrollResult result = null;
    // Keep enrolling until the profiler reports 100%
    while (result == null || result.getPercentage() < 100.0) {
        result = profiler.enroll(getNextEnrollAudioData());
    }
    EagleProfile speakerProfile = profiler.export();

    // Speaker Recognition
    Eagle eagle = new Eagle.Builder()
        .setAccessKey(accessKey)
        .setSpeakerProfile(speakerProfile)
        .build();

    while (true) {
        float[] scores = eagle.process(getNextAudioFrame());
    }

Swift

    // Speaker Enrollment
    let profiler = try EagleProfiler(accessKey: accessKey)
    var percentage: Float = 0.0
    while percentage < 100.0 {
        // The enrollment feedback is ignored here for brevity
        (percentage, _) = try profiler.enroll(
            pcm: get_next_enroll_audio_data())
    }
    let speakerProfile = try profiler.export()

    // Speaker Recognition
    let eagle = try Eagle(
        accessKey: accessKey,
        speakerProfile: speakerProfile)

    while true {
        let profileScores = try eagle.process(
            pcm: get_next_audio_frame())
    }

C

    // Speaker Enrollment
    pv_eagle_profiler_t *eagle_profiler = NULL;
    pv_eagle_profiler_init(access_key, model_path, &eagle_profiler);

    float enroll_percentage = 0.0f;
    while (enroll_percentage < 100.0f) {
        pv_eagle_profiler_enroll(
            eagle_profiler,
            get_next_enroll_audio_frame(),
            get_next_enroll_audio_num_samples(),
            &feedback,
            &enroll_percentage);
    }

    // Query the required buffer size before allocating the export buffer
    int32_t profile_size_bytes = 0;
    pv_eagle_profiler_export_size(eagle_profiler, &profile_size_bytes);
    void *speaker_profile = malloc(profile_size_bytes);
    pv_eagle_profiler_export(eagle_profiler, speaker_profile);

    // Speaker Recognition
    pv_eagle_t *eagle = NULL;
    pv_eagle_init(
        access_key,
        model_path,
        1,
        &speaker_profile,
        &eagle);

    float score = 0.f;
    pv_eagle_process(eagle, pcm, &score);

TypeScript (Web Worker)

    // Speaker Enrollment
    const eagleProfiler =
      await EagleProfilerWorker.create(
        accessKey,
        eagleModel);
    let percentage = 0;
    while (percentage < 100) {
      const result: EagleProfilerEnrollResult =
        await eagleProfiler.enroll(
          enrollAudioData);
      percentage = result.percentage;
    }
    const speakerProfile: EagleProfile =
      eagleProfiler.export();

    // Speaker Recognition
    const eagle = await EagleWorker.create(
      accessKey,
      eagleModel,
      speakerProfile);

    while (true) {
      const scores: number[] =
        await eagle.process(audioData);
    }
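The snippets above feed audio to Eagle frame by frame and get scores back from `process`, but they leave two pieces open: how the frames are produced and how the scores are read. The sketch below is one possible way to fill those gaps in plain Python, not part of the Eagle SDK. The helper names `split_into_frames` and `pick_speaker` are hypothetical, and so are the assumptions that each score corresponds to one enrolled profile, that a higher score means a closer match, and that `0.5` is a sensible cut-off. The frame length should come from the SDK rather than being hard-coded.

```python
from typing import Iterator, Optional, Sequence


def split_into_frames(pcm: Sequence[int], frame_length: int) -> Iterator[Sequence[int]]:
    """Yield consecutive fixed-size frames; a trailing partial frame is dropped."""
    if frame_length <= 0:
        raise ValueError("frame_length must be positive")
    for start in range(0, len(pcm) - frame_length + 1, frame_length):
        yield pcm[start:start + frame_length]


def pick_speaker(scores: Sequence[float], threshold: float = 0.5) -> Optional[int]:
    """Return the index of the best-scoring profile, or None if no score
    reaches the threshold (assumption: higher score = closer match)."""
    if not scores:
        return None
    best = max(range(len(scores)), key=lambda i: scores[i])
    return best if scores[best] >= threshold else None
```

In a recognition loop, each frame from `split_into_frames` would be passed to `process`, and `pick_speaker` would be applied to the returned scores. The threshold trades false accepts against false rejects, so it is worth tuning on recordings from the target environment.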