From AI agents and real-time coaching to meeting transcription, speech-to-text brings new ideas to life. Cheetah Streaming Speech-to-Text opens up even more opportunities by delivering cloud-level accuracy on-device and in real time, combining the best of cloud and on-device processing.

Cheetah Streaming Speech-to-Text now officially supports five new languages: French, German, Italian, Portuguese, and Spanish. This lets more developers use accurate, production-ready, cross-platform Cheetah Streaming Speech-to-Text to build private, cost-effective AI applications with zero network latency.

On-device transcription with cloud-level accuracy within your web browser

Picovoice’s web demos are built on its web SDKs and run entirely within your web browser, so audio is processed locally without any third-party cloud services.

Real-time transcription with Cheetah Streaming Speech-to-Text

Test Cheetah Streaming Speech-to-Text in English, or switch the language to French, German, Italian, Portuguese, or Spanish to try out the new languages.

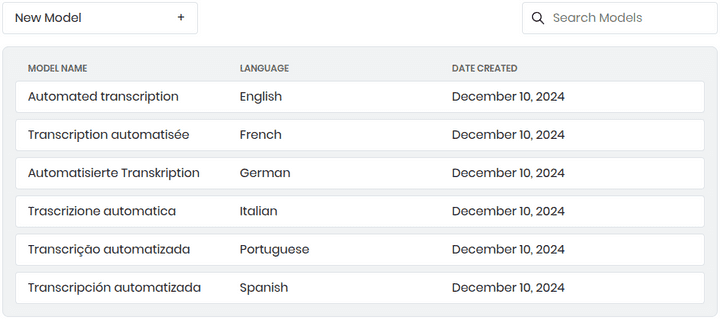

Train Use-Case-Specific Speech Models

Cheetah Streaming Speech-to-Text offers cloud-level accuracy out of the box. However, some use cases and industries, such as healthcare, finance, and legal, rely on specialized terminology that generic speech models cannot transcribe accurately. The Custom Vocabulary and Keyword Boosting features let developers adapt speech-to-text models to their specific application on the no-code Picovoice Console.

Start Building Now

Building with these intuitive, cross-platform speech-to-text SDKs requires no machine learning experience. Anyone can start transcribing with a few lines of code.

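```python
import pvcheetah

# Create a Cheetah instance
o = pvcheetah.create(access_key)

# Process one frame of audio; returns new text and whether an endpoint was detected
partial_transcript, is_endpoint = o.process(get_next_audio_frame())
```

```javascript
const { Cheetah } = require("@picovoice/cheetah-node");

// Create a Cheetah instance
const o = new Cheetah(accessKey);

// Process one frame of audio
const [partialTranscript, isEndpoint] = o.process(audioFrame);
```

```java
// Create a Cheetah instance with an explicit model file
Cheetah o = new Cheetah.Builder()
    .setAccessKey(accessKey)
    .setModelPath(modelPath)
    .build(appContext);

// Process one frame of audio
CheetahTranscript partialResult = o.process(getNextAudioFrame());
```

```swift
// Create a Cheetah instance
let cheetah = try Cheetah(
    accessKey: accessKey,
    modelPath: modelPath)

// Process one frame of audio; the result is a (transcript, endpoint) tuple
let (partialTranscript, isEndpoint) = try cheetah.process(getNextAudioFrame())
```

```java
// Create a Cheetah instance
Cheetah o = new Cheetah.Builder()
    .setAccessKey(accessKey)
    .build();

// Process one frame of audio
CheetahTranscript r = o.process(getNextAudioFrame());
```

```csharp
// Create a Cheetah instance
Cheetah o = Cheetah.Create(accessKey);

// Process one frame of audio
CheetahTranscript partialResult = o.Process(GetNextAudioFrame());
```

```javascript
const {
  result,
  isLoaded,
  isListening,
  error,
  init,
  start,
  stop,
  release,
} = useCheetah();

// Load the engine and model
await init(
  accessKey,
  model
);

// Start and stop listening to the microphone
await start();
await stop();

useEffect(() => {
  if (result !== null) {
    // Handle transcript
  }
}, [result]);
```

```dart
// Create a Cheetah instance
_cheetah = await Cheetah.create(
  accessKey,
  modelPath);

// Process one frame of audio
CheetahTranscript partialResult =
  await _cheetah.process(getAudioFrame());
```

```javascript
// Create a Cheetah instance
const cheetah = await Cheetah.create(
  accessKey,
  modelPath);

// Process one frame of audio
const partialResult = await cheetah.process(getAudioFrame());
```

```c
#include <stdbool.h>
#include <stdint.h>

#include "pv_cheetah.h"

// Create a Cheetah instance
pv_cheetah_t *cheetah = NULL;
pv_cheetah_init(
    access_key,
    model_file_path,
    endpoint_duration_sec,
    enable_automatic_punctuation,
    &cheetah);

// Get one frame of 16-bit PCM audio
const int16_t *pcm = get_next_audio_frame();

// Process the frame; check status before using the outputs
char *partial_transcript = NULL;
bool is_endpoint = false;
const pv_status_t status = pv_cheetah_process(
    cheetah,
    pcm,
    &partial_transcript,
    &is_endpoint);
```

```javascript
// Create a Cheetah worker; the callback receives transcripts as they arrive
const cheetah = await CheetahWorker.create(
  accessKey,
  (cheetahTranscript) => {
    // callback
  },
  {
    base64: cheetahParams,
    // or
    publicPath: modelPath,
  }
);

// Feed microphone audio to the worker
WebVoiceProcessor.subscribe(cheetah);
```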
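The snippets above each handle a single audio frame. As a rough Python sketch of the loop around them, assuming an engine shaped like the `pvcheetah` handle (a `frame_length` attribute, `process()` returning a partial transcript and an endpoint flag, and `flush()` returning any buffered text), the code below slices 16-bit PCM samples into frames and groups partial transcripts into utterances. `StreamingEngine`, `frames`, and `transcribe` are hypothetical helper names, not part of the SDK.

```python
from typing import Iterator, List, Protocol, Sequence, Tuple


class StreamingEngine(Protocol):
    """Hypothetical interface modeled on the pvcheetah handle shown above."""

    frame_length: int

    def process(self, pcm: Sequence[int]) -> Tuple[str, bool]: ...

    def flush(self) -> str: ...


def frames(pcm: Sequence[int], frame_length: int) -> Iterator[List[int]]:
    """Yield consecutive full frames; a trailing partial frame is dropped."""
    for start in range(0, len(pcm) - frame_length + 1, frame_length):
        yield list(pcm[start:start + frame_length])


def transcribe(engine: StreamingEngine, pcm: Sequence[int]) -> List[str]:
    """Feed audio frame by frame and return one transcript per utterance."""
    utterances: List[str] = []
    current = ""
    for frame in frames(pcm, engine.frame_length):
        partial, is_endpoint = engine.process(frame)
        current += partial
        if is_endpoint:
            # At an endpoint, flush() returns any text still buffered in the engine.
            current += engine.flush()
            utterances.append(current.strip())
            current = ""
    # Flush whatever remains after the last full frame.
    current += engine.flush()
    if current.strip():
        utterances.append(current.strip())
    return utterances
```

With the real SDK, the engine would presumably come from `pvcheetah.create(access_key)`, and the PCM would need to be mono 16-bit audio at the engine's `sample_rate`.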