Search Audio & Video Libraries—Instantly, Privately, On‑Device

Overview

Picovoice's Speech-to-Index engine turns audio and video into searchable indexes, letting users query content by phonetic match, intent, or keyword, entirely on-device.

Use Case Scenarios

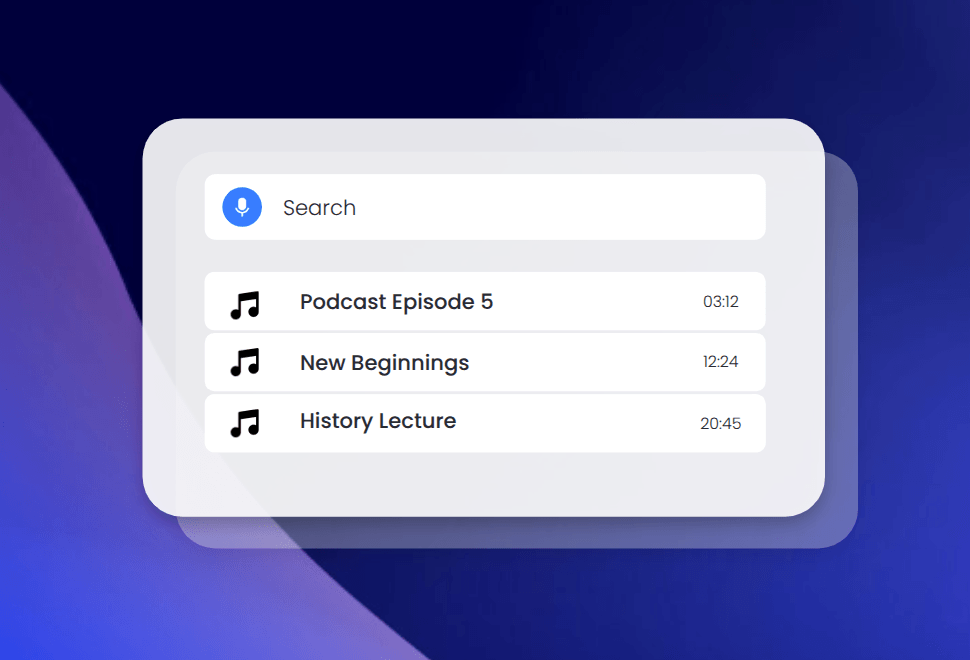

Search Within Podcast Libraries

Listeners want to find clips with a specific quote or topic—without manually transcribing.

- The engine returns matching timestamps and clips instantly, with no cloud needed.

Enterprise Call Archives

Compliance teams reviewing thousands of calls need to find mentions like "insider" or "confidential."

- Phonetic search surfaces precise audio segments in seconds—locally.

Lecture & Audio Archive Discovery

Educational repositories want to let users search by phrase within audio/video.

- Relevant lecture minutes are matched and highlighted—on-device.

Key benefits

- Lightning-fast indexing and search—no delays

- Full control over content—nothing leaves the device

- Better accuracy for spoken words, with fewer misrecognitions than a Speech-to-Text transcript

- Scalable search across libraries of any size

- Ultra-efficient performance: runs in browsers, on servers, and on embedded systems

- Predictable licensing—no cloud or per-query search fees after indexing

Why Picovoice On-device Voice AI for Audio Search?

Related Products

- Build a Custom Audio Search Engine

- Voice Content Moderation with AI

- Speech to Text Alternatives

- Voice Search: How to Find Spoken Keywords and Phrases in Audio Files

- Unlocking the Value of Dark Voice Data

- Podcast Transcription and Search Engine: New Era for Podcast Publishers

- On-device Voice Recognition with Picovoice: 2021 Wrapped

Frequently asked questions

Unlike traditional cloud speech-to-text APIs, on-device Speech-to-Index generates compact phonetic indexes that allow near-instant search without converting entire audio streams to text. This also cuts costs by removing the need for cloud compute or playback processing, making it an efficient alternative for large, searchable audio archives.
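The toy Python sketch below makes the idea of searching a phonetic index, rather than a transcript, more concrete. It is not Picovoice's implementation or API. It assumes an upstream acoustic model has already turned each recording into time-stamped phonemes. The `PhonemeHit` records, the ARPAbet-style symbols, and the file name are all hypothetical. The sketch indexes phoneme trigrams once and then answers each query with dictionary lookups, without producing any text.

```python
from collections import defaultdict
from typing import NamedTuple

# Toy phonetic index; NOT Picovoice's API. The phoneme input is assumed to
# come from some upstream acoustic model (hypothetical here).


class PhonemeHit(NamedTuple):
    phoneme: str      # ARPAbet-style symbol, e.g. "AY"
    start_sec: float  # when the phoneme starts in the recording


N = 3  # length of the phoneme n-grams used as index keys


def build_index(recordings: dict[str, list[PhonemeHit]]) -> dict[tuple[str, ...], list[tuple[str, int]]]:
    """Map every phoneme n-gram to the (recording, position) pairs where it occurs."""
    index = defaultdict(list)
    for name, hits in recordings.items():
        symbols = [h.phoneme for h in hits]
        for i in range(len(symbols) - N + 1):
            index[tuple(symbols[i:i + N])].append((name, i))
    return dict(index)


def search(index, recordings, query: list[str]) -> list[tuple[str, float]]:
    """Return (recording, start time) for every exact phonetic match of query."""
    if len(query) < N:
        raise ValueError(f"query needs at least {N} phonemes")
    matches = []
    # Candidates come from the query's first n-gram; verify the rest in place.
    for name, pos in index.get(tuple(query[:N]), []):
        symbols = [h.phoneme for h in recordings[name]]
        if symbols[pos:pos + len(query)] == query:
            matches.append((name, recordings[name][pos].start_sec))
    return matches


# Hypothetical phonemes for the words "this insider" in one call recording.
call_17 = [PhonemeHit(p, t) for p, t in [
    ("DH", 0.00), ("IH", 0.05), ("S", 0.10),
    ("IH", 0.20), ("N", 0.25), ("S", 0.30), ("AY", 0.35), ("D", 0.45), ("ER", 0.50),
]]
recordings = {"call_17.wav": call_17}

index = build_index(recordings)  # built once, reused for every query
print(search(index, recordings, ["IH", "N", "S", "AY", "D", "ER"]))  # → [('call_17.wav', 0.2)]
```

In this design, the costly step (phoneme recognition and indexing) runs once per recording. Each query is then a hash lookup plus a short check of the candidate positions.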

In many cases, Speech-to-Index performs better than traditional Speech-to-Text engines—especially for slang, proper nouns, and regional accents. Phoneme-based matching helps surface terms that might otherwise be missed due to spelling variations or pronunciation differences.
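Phoneme matching is robust to spelling because it compares sounds rather than letters. The toy Python sketch below illustrates this with a generic technique, phoneme-level edit distance. It does not describe how Speech-to-Index works internally. `LEXICON`, `fuzzy_find`, and the phoneme stream are hypothetical. Because "Cathy" and "Kathy" share one pronunciation, both spellings find the same spot. A one-phoneme tolerance also lets "tomato" match a speaker who pronounces the middle vowel as AA.

```python
# Toy illustration of phoneme-level fuzzy matching; NOT how Speech-to-Index
# is implemented. LEXICON and the phoneme stream are hypothetical.

LEXICON = {  # ARPAbet-style pronunciations, stress marks dropped
    "cathy": ["K", "AE", "TH", "IY"],
    "kathy": ["K", "AE", "TH", "IY"],  # different spelling, same sounds
    "tomato": ["T", "AH", "M", "EY", "T", "OW"],
}


def edit_distance(a: list[str], b: list[str]) -> int:
    """Levenshtein distance counted in whole phonemes rather than letters."""
    prev = list(range(len(b) + 1))
    for i, pa in enumerate(a, start=1):
        curr = [i]
        for j, pb in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,               # delete pa
                            curr[j - 1] + 1,           # insert pb
                            prev[j - 1] + (pa != pb)))  # substitute (free if equal)
        prev = curr
    return prev[-1]


def fuzzy_find(stream: list[str], word: str, max_edits: int = 1) -> list[int]:
    """Start positions of same-length windows within max_edits phoneme edits of word."""
    query = LEXICON[word.lower()]
    n = len(query)
    return [i for i in range(len(stream) - n + 1)
            if edit_distance(stream[i:i + n], query) <= max_edits]


# Hypothetical phonemes for "hi Kathy, tomato", with "tomato" said as "tomahto" (AA).
stream = ["HH", "AY", "K", "AE", "TH", "IY", "T", "AH", "M", "AA", "T", "OW"]

print(fuzzy_find(stream, "Cathy"))                # → [2]
print(fuzzy_find(stream, "Kathy"))                # → [2]
print(fuzzy_find(stream, "tomato"))               # → [6]
print(fuzzy_find(stream, "tomato", max_edits=0))  # → []
```

Fixed-length windows keep the sketch short, but they miss matches where a speaker drops or adds a phoneme. A fuller version could allow variable-length alignments.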

The system is designed to scale efficiently, whether you're indexing a few hours of audio or entire call archives. Index once, then run fast, local queries across massive media libraries.

No GPU or cloud service is required. One of the key benefits of Picovoice's Speech-to-Index is that it operates entirely on-device or on standard infrastructure. You can run it directly in web browsers, desktop environments, or on lightweight servers, with no cloud costs and no data privacy risks from third-party hosting.

Picovoice Speech-to-Index is currently in beta and available exclusively to Enterprise Plan customers. If you're already a Picovoice customer, please contact your Picovoice representative. If you're interested in becoming a customer, get in touch with us to learn more.