TLDR: Build a smart IVR system for AI call center automation in Python using on-device voice AI. This tutorial shows how to implement wake word detection, intent recognition, local LLM reasoning, and low-latency conversational IVR without cloud dependencies.

Contact center AI depends on fast, accurate voice processing to handle customer queries effectively. On-device processing eliminates network latency entirely, enabling conversational IVR that responds faster than cloud-based alternatives.

Traditional call center IVR (interactive voice response) systems frustrate customers with rigid menu trees and slow cloud processing. Callers navigate numbered options, repeat information multiple times, and wait through network round-trips that add 1-2 seconds of latency per interaction. Cloud-based voice APIs compound this latency. For example, speech recognition takes 920 ms with Amazon Transcribe Streaming, and text-to-speech adds another 340 ms with services like ElevenLabs. Text-based intent classification, which transcribes speech before classifying it, also adds processing time and reduces accuracy compared to direct speech-to-intent.

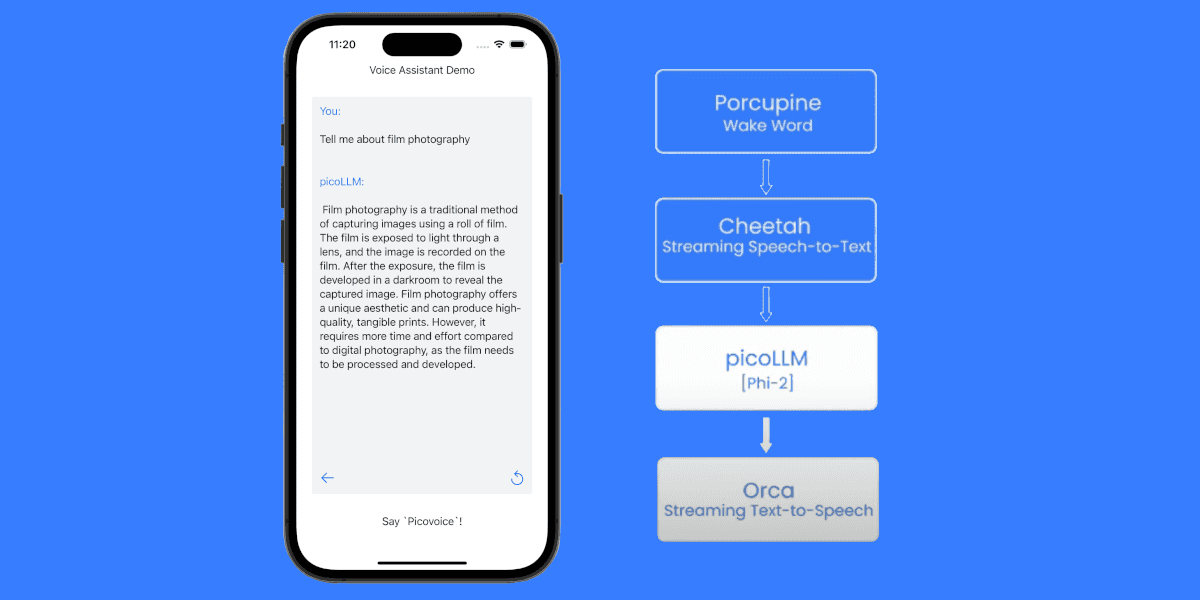

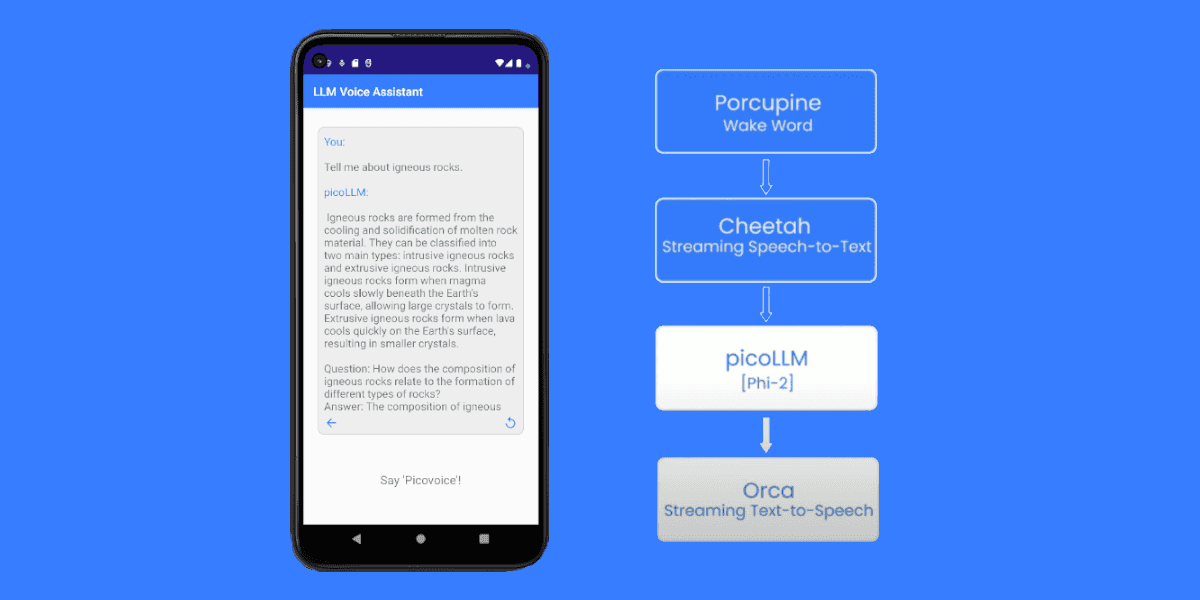

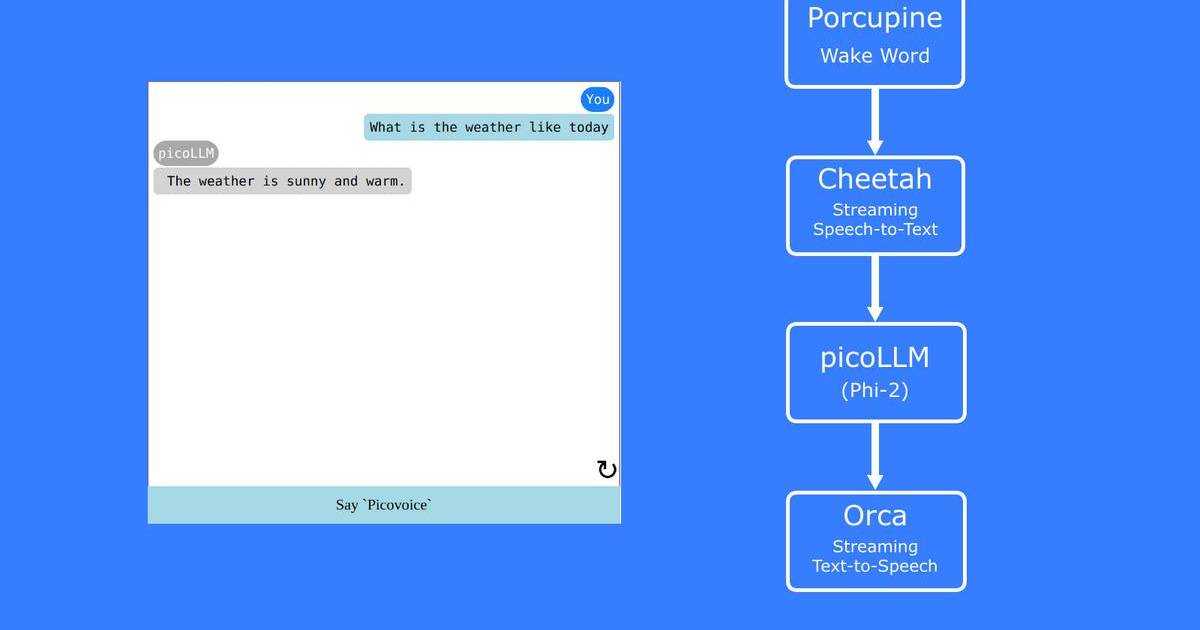

This tutorial shows how to build a Python IVR system for an AI call center that routes customer service queries between intent recognition and LLM reasoning. The implementation uses Porcupine Wake Word for voice activation, Rhino Speech-to-Intent for intent recognition, Cheetah Streaming Speech-to-Text and picoLLM for complex user queries, and Orca Streaming Text-to-Speech for natural responses.

What You'll Build:

A conversational IVR system that:

- Activates with a custom wake word

- Handles common queries instantly using speech-to-intent recognition

- Routes unrecognized queries to a local language model for reasoning

- Responds with natural speech synthesis

What You'll Need:

- Python 3.9+

- A desktop or laptop with microphone and speakers

- Picovoice AccessKey from the Picovoice Console

To learn more about the advantages and challenges of voice AI agents in customer service, see: Voice AI Agents in Customer Service.

Smart IVR Architecture: Intelligent Call Routing with Speech Recognition

The smart IVR system uses a two-tier approach to handle customer queries efficiently:

Intent Recognition: When a customer speaks, Rhino Speech-to-Intent processes the audio directly. If the customer service voicebot recognizes a known intent with required parameters (e.g., "check order status for order 12345"), it responds immediately. This handles the majority of routine customer service queries with minimal latency.

LLM Reasoning: If Rhino returns is_understood=False for ambiguous or complex queries (e.g., "why was I charged twice when I cancelled my order?"), the system prompts the customer to provide more details, then uses Cheetah Streaming Speech-to-Text to transcribe the explanation and routes it to picoLLM for intelligent reasoning.

This AI IVR architecture optimizes for common cases while handling edge cases flexibly.

Train a Wake Word for Voice Activation

- Navigate to the Porcupine page in Picovoice Console.

- Enter your wake phrase (e.g., "Hey Assistant") and test it.

- Click "Train", select your platform, and download the .ppn model file.

Create Custom Voice Commands for Customer Service Automation

Rhino requires a context file that defines the specific intents the smart IVR will handle. A context specifies the phrases customers might say and what structured data to extract.

- Sign up for a Picovoice Console account and navigate to the Rhino page.

- Click "Create New Context" and name it CustomerService.

- Click the "Import YAML" button in the top-right corner and paste the following context definition:

- Test the context in the browser using the microphone button.

- Download the .rhn context file for your target platform.

For production-ready customer service voicebots, expand the context to cover 10-15 common intents. Rhino's expression syntax supports optional phrases, synonyms, and slot types like numbers and dates. See the Rhino Expression Syntax Cheat Sheet for details.

Set up a Local LLM

picoLLM runs compressed language models efficiently on-device for call center automation. Download a model from the picoLLM Console:

- Sign in to Picovoice Console and navigate to picoLLM.

- Select a model. This tutorial uses llama-3.2-3b-instruct-505.pllm.

- Click "Download" and place it in your project directory.

Set Up the Python Environment

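Install the required SDKs with pip: pvporcupine, pvrhino, pvcheetah, picollm, pvorca, pvrecorder for microphone capture, and pvspeaker for audio playback.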

Implement Wake Word Detection

The smart IVR begins by listening for the wake word before processing customer queries:
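A minimal sketch of such a listening loop follows. It assumes the engine exposes `process(frame)` returning a keyword index (negative when nothing is detected), which is how Porcupine's Python SDK behaves. The helper names `wait_for_wake_word` and `listen_with_porcupine` and the `max_frames` cutoff are illustrative, not part of any SDK.

```python
from typing import Callable, Optional, Sequence


def wait_for_wake_word(
    engine,
    read_frame: Callable[[], Sequence[int]],
    max_frames: Optional[int] = None,
) -> bool:
    """Feed audio frames to the engine until it reports a keyword.

    Returns True on detection, or False if max_frames frames pass without one.
    """
    frames_seen = 0
    while max_frames is None or frames_seen < max_frames:
        # Porcupine returns the index of the detected keyword, or -1 for none.
        if engine.process(read_frame()) >= 0:
            return True
        frames_seen += 1
    return False


def listen_with_porcupine(access_key: str, keyword_path: str) -> None:
    """Wire the loop to the real SDKs (needs pvporcupine and pvrecorder installed)."""
    import pvporcupine
    from pvrecorder import PvRecorder

    porcupine = pvporcupine.create(access_key=access_key, keyword_paths=[keyword_path])
    recorder = PvRecorder(frame_length=porcupine.frame_length)
    recorder.start()
    try:
        if wait_for_wake_word(porcupine, recorder.read):
            print("Wake word detected")
    finally:
        # Release the microphone and native resources even if an error occurs.
        recorder.stop()
        recorder.delete()
        porcupine.delete()
```

Passing the engine and the frame source as arguments keeps the loop testable without a microphone or an AccessKey.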

Implement Real-Time Speech-to-Text and Intent Recognition

After wake word detection, the conversational IVR captures audio and processes it through Rhino Speech-to-Intent to detect known intents:
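As a hedged sketch, the capture step could look like the following. It relies on Rhino's Python interface, where `process(frame)` returns True once the inference is finalized and `get_inference()` exposes `is_understood`, `intent`, and `slots`. The `IntentResult` container and `capture_intent` helper are hypothetical names introduced for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional, Sequence


@dataclass
class IntentResult:
    """Plain copy of the fields read from a Rhino inference."""
    is_understood: bool
    intent: Optional[str] = None
    slots: Dict[str, str] = field(default_factory=dict)


def capture_intent(
    rhino,
    read_frame: Callable[[], Sequence[int]],
    max_frames: Optional[int] = None,
) -> Optional[IntentResult]:
    """Stream frames into Rhino until it finalizes an inference.

    Returns None if max_frames frames pass without a finalized inference.
    With the real SDK, `rhino` would come from
    pvrhino.create(access_key=..., context_path=...).
    """
    frames_seen = 0
    while max_frames is None or frames_seen < max_frames:
        if rhino.process(read_frame()):
            inference = rhino.get_inference()
            return IntentResult(
                is_understood=inference.is_understood,
                intent=inference.intent,
                # slots may be empty or missing when nothing was understood
                slots=dict(inference.slots or {}),
            )
        frames_seen += 1
    return None
```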

Build Intelligent Call Routing Logic for AI IVR Systems

The intelligent call routing logic determines whether to handle the query with intent recognition or route to picoLLM for reasoning:
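The two-tier decision can be sketched in a few lines. Apart from `speakToHuman` and `handle_structured_intent`, which the tutorial names, the intent name `checkOrderStatus`, the slot name `orderNumber`, and the canned replies below are assumptions made for illustration.

```python
from typing import Callable, Dict, Optional


def handle_structured_intent(intent: str, slots: Dict[str, str]) -> str:
    """Return a mock reply for a recognized intent (hypothetical intents and replies)."""
    if intent == "checkOrderStatus":
        return f"Order {slots.get('orderNumber', 'unknown')} is out for delivery."
    if intent == "speakToHuman":
        return "Connecting you to an agent."
    return "Sorry, I can't help with that yet."


def route_query(
    is_understood: bool,
    intent: Optional[str],
    slots: Dict[str, str],
    fallback: Callable[[], str],
) -> str:
    """Answer from the intent tier when possible, otherwise call the LLM tier."""
    if is_understood and intent is not None:
        return handle_structured_intent(intent, slots)
    # The fallback is only invoked when Rhino did not understand the query,
    # so the slower transcription and LLM path is skipped for routine requests.
    return fallback()
```

Taking the fallback as a callable means the slower path costs nothing unless it is actually needed.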

Handle Complex Queries with Speech-to-Text

When Rhino Speech-to-Intent doesn't recognize an intent, prompt the customer for more details and use Cheetah Streaming Speech-to-Text to transcribe their explanation:
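A possible shape for the transcription step is sketched below. It assumes Cheetah's Python interface, where `process(frame)` returns a partial transcript together with an endpoint flag and `flush()` returns any remaining text. The helper name `transcribe_until_endpoint` and the `max_frames` cutoff are illustrative.

```python
from typing import Callable, List, Optional, Sequence


def transcribe_until_endpoint(
    cheetah,
    read_frame: Callable[[], Sequence[int]],
    max_frames: Optional[int] = None,
) -> str:
    """Accumulate partial transcripts until the speaker pauses.

    With the real SDK, `cheetah` would come from pvcheetah.create(access_key=...).
    """
    parts: List[str] = []
    frames_seen = 0
    while max_frames is None or frames_seen < max_frames:
        partial, is_endpoint = cheetah.process(read_frame())
        parts.append(partial)
        frames_seen += 1
        if is_endpoint:
            # An endpoint means the engine detected the end of the utterance.
            break
    # flush() returns whatever text the engine was still buffering.
    parts.append(cheetah.flush())
    return "".join(parts).strip()
```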

Add Local LLM Reasoning for Complex Queries

When Rhino Speech-to-Intent cannot extract a structured intent, picoLLM provides intelligent reasoning while maintaining on-device processing:
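One way to sketch this step is shown below. It assumes picoLLM's Python interface, where `get_dialog()` builds a chat-formatted prompt and `generate()` returns an object with a `completion` attribute. The system prompt wording, the `answer_with_llm` helper, and the 128-token default are assumptions chosen for illustration.

```python
SYSTEM_PROMPT = (
    "You are a concise, polite customer service assistant. "
    "Answer in one or two short sentences suitable for reading aloud."
)


def answer_with_llm(llm, transcript: str, token_limit: int = 128) -> str:
    """Ask the local model to respond to a transcribed customer query.

    With the real SDK, `llm` would come from
    picollm.create(access_key=..., model_path=...).
    """
    # The dialog object applies the chat template expected by the model.
    dialog = llm.get_dialog(system=SYSTEM_PROMPT)
    dialog.add_human_request(transcript)
    result = llm.generate(
        prompt=dialog.prompt(),
        completion_token_limit=token_limit,  # keeps spoken replies short
    )
    return result.completion.strip()
```

Capping the completion length is a design choice for voice output, since long answers are tiring to listen to on a call.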

Add Text-to-Speech for Conversational IVR

The conversational IVR converts text responses into natural speech using Orca:
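The sketch below uses single-shot synthesis for brevity rather than Orca's streaming mode. It assumes `orca.synthesize(text)` returns the PCM samples together with word alignments and that `speaker.flush(pcm)` writes the samples and blocks until playback finishes, which matches the current Python SDKs as far as I know. The helper names `speak` and `speak_with_orca` are illustrative.

```python
def speak(orca, speaker, text: str) -> int:
    """Synthesize text and play it; return the number of samples played."""
    if not text.strip():
        return 0  # nothing to say, skip synthesis entirely
    pcm, _alignments = orca.synthesize(text)
    # flush() is assumed to write the samples and wait for playback to finish.
    speaker.flush(pcm)
    return len(pcm)


def speak_with_orca(access_key: str, text: str) -> None:
    """Wire speak() to the real SDKs (needs pvorca and pvspeaker installed)."""
    import pvorca
    from pvspeaker import PvSpeaker

    orca = pvorca.create(access_key=access_key)
    speaker = PvSpeaker(sample_rate=orca.sample_rate, bits_per_sample=16)
    speaker.start()
    try:
        speak(orca, speaker, text)
    finally:
        # Release the audio device and native resources.
        speaker.stop()
        speaker.delete()
        orca.delete()
```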

Complete Python Code for Call Center Automation

This complete implementation combines all components into a smart IVR for call center automation:
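As a compact illustration of how the stages might be wired together, the sketch below runs a single conversational turn. Every stage is passed in as a callable, so the function name `run_turn`, its parameters, and the follow-up prompt wording are assumptions rather than the tutorial's actual listing.

```python
from typing import Any, Callable, Optional


def run_turn(
    wait_for_wake_word: Callable[[], bool],
    capture_intent: Callable[[], Optional[Any]],
    answer_intent: Callable[[Any], str],
    answer_complex: Callable[[], str],
    speak: Callable[[str], None],
) -> bool:
    """Run one wake-word-to-response turn; return False if no wake word was heard."""
    if not wait_for_wake_word():
        return False
    result = capture_intent()
    if result is not None and result.is_understood:
        # Tier 1: a known intent was extracted directly from speech.
        reply = answer_intent(result)
    else:
        # Tier 2: ask for details, then transcribe and reason with the LLM.
        speak("Could you describe the issue in a bit more detail?")
        reply = answer_complex()
    speak(reply)
    return True
```

In a long-running service this function would typically be called in a loop, with the engines created once at startup and released on shutdown.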

Run the Smart IVR System

To run the Smart IVR system in Python, update the model paths to match your local files and have your Picovoice AccessKey ready:
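The exact command depends on how the script accepts its settings. As a hedged sketch, assuming a hypothetical script that takes them as command-line flags (the flag names below are illustrative, not confirmed by the tutorial), argument parsing could look like this:

```python
import argparse
from typing import List, Optional


def parse_args(argv: Optional[List[str]] = None) -> argparse.Namespace:
    """Parse the AccessKey and model paths (flag names are hypothetical)."""
    parser = argparse.ArgumentParser(description="On-device smart IVR demo")
    parser.add_argument("--access_key", required=True,
                        help="AccessKey from the Picovoice Console")
    parser.add_argument("--keyword_path", required=True,
                        help="Path to the Porcupine .ppn wake word file")
    parser.add_argument("--context_path", required=True,
                        help="Path to the Rhino .rhn context file")
    parser.add_argument("--picollm_model_path", required=True,
                        help="Path to the picoLLM .pllm model file")
    return parser.parse_args(argv)
```

With flags like these, a hypothetical `smart_ivr.py` would be started by passing the AccessKey and the three file paths on the command line.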

The customer service voicebot will listen for your wake word, process customer queries with intelligent call routing, and respond with natural speech.

Extending the AI Customer Service Voicebot

Connect to Phone Systems:

- Integrate with VoIP platforms like Twilio or Asterisk.

- Route PvSpeaker output back to the phone line for two-way conversations.

Add Multilingual Support:

- Create Speech-to-Intent contexts for multiple languages and use Porcupine's multilingual wake word detection to automatically route to the appropriate language context.

- Orca Streaming Text-to-Speech also supports multiple languages for voice responses.

Database Integration:

- Replace the mock responses in handle_structured_intent() with actual database queries to retrieve real customer data, order statuses, and account information.
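A minimal sketch of such a lookup, using SQLite from the standard library: the `orders` table, its columns, and the reply wording are hypothetical, and a production system would query its own schema or service instead.

```python
import sqlite3


def lookup_order_status(conn: sqlite3.Connection, order_number: str) -> str:
    """Build a spoken reply from the order's status (hypothetical `orders` table)."""
    # A parameterized query keeps spoken input from being interpreted as SQL.
    row = conn.execute(
        "SELECT status FROM orders WHERE order_number = ?", (order_number,)
    ).fetchone()
    if row is None:
        return f"I couldn't find an order numbered {order_number}."
    return f"Order {order_number} is currently {row[0]}."


def make_demo_db() -> sqlite3.Connection:
    """Create an in-memory database with one sample order for experimentation."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (order_number TEXT PRIMARY KEY, status TEXT)")
    conn.execute("INSERT INTO orders VALUES ('12345', 'shipped')")
    conn.commit()
    return conn
```

The intent handler could call `lookup_order_status` with the order number slot extracted by Rhino in place of a hard-coded reply.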

Conversation Analytics:

- Log all transcripts, detected intents, and LLM responses to track common queries, measure resolution rates, and identify areas where the context needs expansion or LLM responses need refinement.
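One lightweight way to do this is to append a JSON record per turn and aggregate later. The file format, field names, and the `llm_fallback` label below are assumptions made for illustration. Note that turns handled by speech-to-intent have no transcript, so that field is optional.

```python
import json
import time
from collections import Counter
from typing import Optional


def log_turn(
    path: str,
    transcript: Optional[str],
    intent: Optional[str],
    llm_response: Optional[str],
) -> None:
    """Append one conversation turn to a JSON Lines file."""
    record = {
        "timestamp": time.time(),
        "transcript": transcript,
        "intent": intent,
        "llm_response": llm_response,
    }
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")


def intent_counts(path: str) -> Counter:
    """Count turns per intent; turns without an intent count as 'llm_fallback'."""
    counts: Counter = Counter()
    with open(path, encoding="utf-8") as f:
        for line in f:
            if line.strip():
                record = json.loads(line)
                counts[record["intent"] or "llm_fallback"] += 1
    return counts
```

A high `llm_fallback` share for a recurring topic suggests that the Rhino context should gain a new intent for it.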

Human Handoff:

- Implement a queue system for the speakToHuman intent that connects to your existing call center software or creates tickets for callback scheduling.
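As a starting point, an in-memory first-in, first-out queue is sketched below. The `HandoffTicket` fields and the `HandoffQueue` class are hypothetical; a real deployment would more likely push tickets into the existing call center or ticketing system.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, Optional


@dataclass
class HandoffTicket:
    """Minimal record for a caller waiting on a human agent (hypothetical fields)."""
    caller_id: str
    summary: str


class HandoffQueue:
    """First-in, first-out queue of callers who asked for a human."""

    def __init__(self) -> None:
        self._tickets: Deque[HandoffTicket] = deque()

    def enqueue(self, caller_id: str, summary: str) -> int:
        """Add a caller and return their position in the queue (1 = next)."""
        self._tickets.append(HandoffTicket(caller_id, summary))
        return len(self._tickets)

    def next_ticket(self) -> Optional[HandoffTicket]:
        """Pop the longest-waiting caller, or None if the queue is empty."""
        return self._tickets.popleft() if self._tickets else None
```

The returned position can be read back to the caller, for example "You are number 2 in the queue."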

You can start building your own commercial or non-commercial call center automation projects using Picovoice's self-service Console.
