Detect when users start or stop speaking in real time

Production-ready, lightweight, cross-platform voice activity detection powered by deep learning, doubling the accuracy of WebRTC VAD.

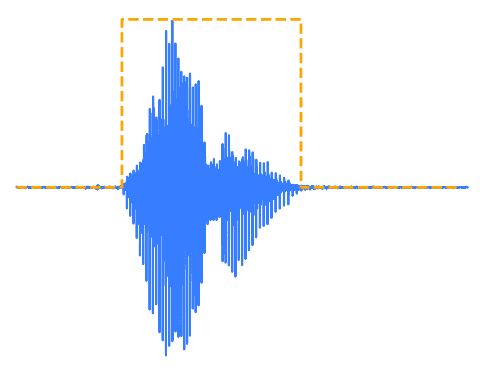

Cobra Voice Activity Detection (VAD) is software that scans audio streams to identify the presence of human speech in real time.

Production-ready Cobra Voice Activity Detection enables adding highly accurate voice detection to any platform in minutes.

Get started with just a few lines of code

Python:

    c = pvcobra.create(access_key)

    while True:
        is_voiced = c.process(audio_frame())

JavaScript:

    const c = new Cobra(accessKey)

    const voiceProbability = c.process(audioFrame);

Java:

    Cobra c = new Cobra(accessKey);

    while(true) {
        float isVoiced = c.process(audioFrame());
    }

Swift:

    let c = Cobra(accessKey: accessKey)

    while true {
        let isVoiced = c.process(audioFrame())
    }

JavaScript (web):

    let c = await CobraWorker.create(
        accessKey,
        (isVoiced) => {
            // callback
        })

    const processor = WebVoiceProcessor.instance()
    await processor.subscribe(c)

C#:

    Cobra o = Cobra(accessKey);

    float voiceProbability = o.Process(GetNextAudioFrame());

C:

    pv_cobra_init(
        access_key,
        &cobra);

    while (true) {
        pv_cobra_process(
            cobra,
            audio_frame(),
            &is_voiced);
    }

Cobra Voice Activity Detection has everything that enterprise developers need: twice the WebRTC VAD accuracy, ease of use, cross-platform SDKs, and enterprise support. The lightweight on-device voice activity detection model eliminates network latency and processes data without introducing any compute latency.
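The snippets above obtain one result per audio frame. As a rough illustration of how those per-frame results might be turned into "started speaking" and "stopped speaking" events, here is a minimal Python sketch. It assumes the value returned by `process` is a voice probability between 0 and 1 (as the `voiceProbability` variable name suggests). The function name `speech_events`, both thresholds, and the `hangover_frames` parameter are hypothetical choices for this example, not part of the Cobra SDK.

```python
from typing import Iterable, Iterator, Tuple


def speech_events(
    probabilities: Iterable[float],
    start_threshold: float = 0.7,  # assumed value; tune for your application
    stop_threshold: float = 0.3,   # assumed value; kept below start_threshold
    hangover_frames: int = 3,      # assumed: consecutive quiet frames before "stop"
) -> Iterator[Tuple[int, str]]:
    """Yield (frame_index, "start" or "stop") from per-frame voice probabilities."""
    speaking = False
    quiet_run = 0  # consecutive frames below stop_threshold while speaking
    for index, probability in enumerate(probabilities):
        if not speaking:
            if probability >= start_threshold:
                speaking = True
                quiet_run = 0
                yield index, "start"
        elif probability < stop_threshold:
            quiet_run += 1
            if quiet_run >= hangover_frames:
                speaking = False
                yield index, "stop"
        else:
            # Speech resumed before the hangover elapsed.
            quiet_run = 0


if __name__ == "__main__":
    # Hypothetical probabilities standing in for successive process() results.
    demo = [0.1, 0.2, 0.9, 0.8, 0.2, 0.9, 0.1, 0.1, 0.1, 0.05]
    print(list(speech_events(demo)))  # [(2, 'start'), (8, 'stop')]
```

Using two thresholds plus a short hangover is one common way to avoid flickering between states on brief pauses; a single threshold would also work for simpler cases.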

Traditional alternatives such as WebRTC VAD use classic signal processing, while open-source alternatives rely on models or runtimes, such as ONNX and PyTorch, that were built for research or other purposes. This limits the achievable accuracy and resource efficiency, forcing a choice between more compute power and lower accuracy.

Why choose Cobra Voice Activity Detection over other Voice Activity Detection Tools?

- Production-ready model

- Intuitive SDK

- 3 Monthly Active Users

Complete Tutorial: Voice Activity Detection in C

How to Add Voice Activity Detection to a .NET App

Choosing the Best Voice Activity Detection in 2026: Cobra vs Silero vs WebRTC VAD

Voice Activity Detection (VAD): The Complete 2026 Guide to Speech Detection

How to Record Audio in .NET

Cobra VAD V2.1 Release — Same footprint with Superior Accuracy

Frequently asked questions

Voice activity detection (VAD) is a technology used to detect the presence of human speech within an audio signal. It is also known as speech activity detection, speech detection, or voice detection. VAD is an essential building block for Automatic Speech Recognition (ASR).

Standard VAD detects the presence of any human speech. For speaker identification, you'd need a separate speaker recognition system that can work alongside VAD.

Check out Eagle Speaker Recognition for speaker recognition and voice identification.

Voice Activity Detection (VAD) detects any human speech, while Wake Word Detection listens for specific trigger phrases. VAD can be used to activate software whenever someone speaks, regardless of what is said. In contrast, Wake Word Detection only activates software when a particular word or phrase is spoken.

Check out Porcupine Wake Word for wake word detection and keyword spotting.

Voice ID, also known as voice biometrics or speaker recognition, identifies who is speaking by analyzing the unique vocal characteristics of pre-registered users. In contrast, VAD simply detects whether anyone is speaking, without identifying them.

Check out Eagle Speaker Recognition for speaker recognition and voice identification.

Enterprises may have different expectations from Voice Activity Detectors. Cobra Voice Activity Detection is the best Voice Activity Detector for those looking for accurate, cross-platform, resource-efficient, and ready-to-deploy VAD.

Traditional voice activity detection (VAD) algorithms, including the widely used WebRTC VAD, rely on statistical models such as Gaussian Mixture Models (GMMs). These are older techniques that offer limited adaptability to modern, real-world conditions. Newer VAD solutions built on open-source models do not control the full development pipeline. They depend on pre-trained models and general-purpose third-party runtimes (PyTorch, ONNX, and TensorFlow), which limit fine-grained optimization and often add unnecessary overhead.

Picovoice takes a fundamentally different approach. We build our entire stack from the ground up and own the entire data pipeline and training infrastructure, enabling full end-to-end optimization. This allows Cobra VAD to be:

- Smaller

- Faster

- More accurate

- Optimized for real-time

- Optimized for cross-platform deployment

- Flexible for further optimization

This architectural difference enables Cobra to deliver cloud-level accuracy on edge devices, without the latency, power, or memory costs typically associated with deep learning models.

Typical voice activity detection algorithms, including the most popular one, WebRTC VAD, use learned statistical models such as Gaussian mixture models. This is an older technique: WebRTC VAD is computationally efficient and works on streaming audio signals, but its accuracy is limited. Cobra Voice Activity Detection uses deep learning, achieving the highest accuracy across all platforms.
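To make the statistical approach concrete, here is a minimal Python sketch of a likelihood-ratio decision on frame energy. It is a deliberate simplification: it uses one Gaussian per class rather than a mixture, it is not WebRTC VAD's actual implementation, and the means and standard deviations are made-up values that a real system would learn from data. All function names are hypothetical.

```python
import math
from typing import Sequence, Tuple


def log_energy(frame: Sequence[float]) -> float:
    """Log of the mean squared amplitude of a non-empty frame."""
    mean_square = sum(sample * sample for sample in frame) / len(frame)
    return math.log(mean_square + 1e-12)  # small constant avoids log(0)


def gaussian_log_pdf(x: float, mean: float, std: float) -> float:
    """Log density of a one-dimensional Gaussian."""
    variance = std * std
    return -0.5 * math.log(2.0 * math.pi * variance) - (x - mean) ** 2 / (2.0 * variance)


def is_speech(
    frame: Sequence[float],
    speech: Tuple[float, float] = (-3.0, 1.5),  # assumed (mean, std) of speech log-energy
    noise: Tuple[float, float] = (-9.0, 1.5),   # assumed (mean, std) of noise log-energy
) -> bool:
    """Classify a frame as speech when the speech model is more likely than the noise model."""
    x = log_energy(frame)
    log_likelihood_ratio = gaussian_log_pdf(x, *speech) - gaussian_log_pdf(x, *noise)
    return log_likelihood_ratio > 0.0
```

A model like this only sees how loud a frame is, which is one reason energy-driven statistical detectors can confuse loud non-speech noise with speech.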

Cobra Voice Activity Detection processes real-time conversations or recordings on-device, keeping audio private and supporting HIPAA-, CCPA-, and GDPR-compliant experiences. Cobra Voice Activity Detection can run on web browsers, mobile applications, IoT devices, laptops, or servers, wherever the data resides.

Cobra Voice Activity Detection works standalone but also pairs with other engines to enable several use cases. For example, developers combine Cobra Voice Activity Detection with Rhino Speech-to-Intent for QSR drive-thru voice assistants; with Cheetah Streaming Speech-to-Text for real-time agent coaching or LLM-powered voice assistants, which can start or stop responding depending on whether human speech or silence is detected; and with Leopard Speech-to-Text for cost-effective audio transcription.
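One way such a pairing might look in practice is to use the per-frame voice probability to decide which audio is worth handing to a downstream engine. The Python sketch below groups frames into voiced segments and bridges short pauses. The function name `voiced_segments`, the threshold, and `max_gap_frames` are hypothetical choices for illustration, and the downstream transcription call is left out because it depends on the engine used.

```python
from typing import Iterable, List, Sequence, Tuple

Frame = Sequence[int]  # one frame of PCM samples


def voiced_segments(
    scored_frames: Iterable[Tuple[Frame, float]],
    threshold: float = 0.5,   # assumed voice-probability cutoff
    max_gap_frames: int = 2,  # assumed: longest pause kept inside one segment
) -> List[List[Frame]]:
    """Group (frame, voice_probability) pairs into segments of speech."""
    segments: List[List[Frame]] = []
    current: List[Frame] = []
    gap: List[Frame] = []  # trailing quiet frames not yet committed to the segment
    for frame, probability in scored_frames:
        if probability >= threshold:
            if current:
                current.extend(gap)  # short pause inside an utterance: keep it
            gap = []
            current.append(frame)
        elif current:
            gap.append(frame)
            if len(gap) > max_gap_frames:
                # Pause is too long: close the segment and drop the trailing silence.
                segments.append(current)
                current, gap = [], []
    if current:
        segments.append(current)
    return segments
```

Each returned segment could then be passed to a speech-to-text or intent engine, so that silence is never sent for processing.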

Yes! Reach out to the Picovoice Consulting team to get Cobra Voice Activity Detection ported to your platform or a custom voice activity detector trained for you.

Picovoice docs, blog, Medium posts, and GitHub are great resources to learn about voice AI, Picovoice technology, and how to start building voice-activated products. Enterprise customers get dedicated support specific to their applications from Picovoice Product & Engineering teams. Existing Picovoice customers can reach out to their contacts, and prospects can purchase Enterprise Support before committing to any paid plan.